Pardon the long, nerdy, geeked-out mobile digital photography upchuck post. Your eyes may glaze over two sentences in, but I love this stuff. Yes, I know I am being nitpicky... but

A developer recently modified the Google Camera so that it works on almost any phone powered by the SD820 or newer (as those have the necessary ISP to handle things). Well, the G6 is on that list. Now, some of you may know that I don't exactly have the highest opinion of the G6's camera. LG's processing cooks pictures far too heavily and leaves them looking fake... that, combined with the fact that the small sensor requires substantially longer exposure times, well...

The Google Camera is about as bare bones as you can find for a camera; not a lot of modes, not a lot of adjustments, just the basics... but... it also has, by far, the mobile industry's best image processing system in HDR+.

Quick tech lesson. HDR+ is a vastly different mode from the HDR that you see on other cameras. It takes a drastically different approach to image processing and borrows from astrophotography, where you shoot a set of frames and build the final picture from information in the captures themselves. It also results in images that are less artificially processed, have more depth and look more real.
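For anyone who wants to see why stacking frames helps, here is a toy sketch in Python. It is not Google's pipeline (the real thing also has to line the frames up and cope with movement, which I skip entirely); it only shows the astrophotography idea that averaging several noisy shots of the same scene knocks the noise down. The scene values, the noise level and the function names are all made up for illustration.

```python
import random
import statistics

def capture_frame(scene, noise_sigma, rng):
    """Simulate one short exposure: the true pixel value plus random sensor noise."""
    return [value + rng.gauss(0.0, noise_sigma) for value in scene]

def stack_frames(frames):
    """Merge a burst by averaging each pixel position across all frames."""
    return [sum(pixels) / len(frames) for pixels in zip(*frames)]

def noise_level(image, scene):
    """Standard deviation of the error against the known true scene."""
    return statistics.pstdev([a - b for a, b in zip(image, scene)])

rng = random.Random(42)                 # fixed seed so runs are repeatable
scene = [100.0] * 2000                  # hypothetical flat grey patch
single = capture_frame(scene, 10.0, rng)
burst = [capture_frame(scene, 10.0, rng) for _ in range(9)]
merged = stack_frames(burst)

print("single frame noise:", round(noise_level(single, scene), 1))
print("9-frame stack noise:", round(noise_level(merged, scene), 1))
```

With nine frames the noise should land at roughly a third of the single-frame figure, since averaging N frames cuts random noise by about the square root of N. No smoothing filter was involved, so nothing in the scene gets smeared.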

I skipped a lot ... I can explain more to anyone that wishes to learn.

OK... back to the topic... Someone opened up the Google Camera and tweaked it so that it will run on phones outside the Pixel and Nexus lines. I immediately downloaded it and installed it. Now, it's buggy: some of the functions will crash (the dev has issued a few updates since) and others (the wide angle camera) don't even exist in it, but I wanted to use it as an exercise to see how many of my gripes are with LG's hardware (the camera sensors are far too small for today's market, IMHO) and how many are with their software.

Now, here is an example of the difference between how the Google Camera (via HDR+) processes an image and LG's own method. Both shots were taken in bright sunlight with similar exposure and ISO values. This is a small crop, blown up 200% to make the processing artifacts clearer. The left is from LG's stock camera app in auto mode with HDR. The right is the Google Camera with HDR+.

The difference in how heavily the image is processed is pretty clear here. LG overshoots the sharpening, leading to a lot of halos... you can see those on the blue umbrella on the right side of each image... the white trim and cap have distinct black borders. When zoomed back out, this oversharpening gives the appearance of a clearer picture, but it's processing playing tricks on you. Now on to the other side of the processing coin: noise reduction. Again, focus on the blue umbrella. Notice how the subtle creasing is nearly gone in the left pane, how the sand has lost nearly all of its shading detail... and lastly, our poor little skyrat... sorry... seagull... is processed within an inch of its life. Photo quality is often subjective, but it's clear, at least to me, that the crop on the right looks like a photograph and the one on the left like a digital representation of one.
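If you are wondering where those black borders come from, here is a rough Python sketch of a plain unsharp mask on a single row of pixels. I have no idea what LG's sharpening actually does, so treat this as the textbook technique and not their algorithm; the pixel values, the blur radius and the `amount` settings are just numbers I picked.

```python
def box_blur(row, radius=1):
    """Blur a 1D row of pixels with a moving average (edge pixels are repeated)."""
    last = len(row) - 1
    out = []
    for i in range(len(row)):
        window = [row[min(max(j, 0), last)] for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out

def unsharp_mask(row, amount, radius=1):
    """Sharpen by adding back the difference between each pixel and its blur."""
    blurred = box_blur(row, radius)
    return [p + amount * (p - b) for p, b in zip(row, blurred)]

# Hypothetical edge: darker umbrella fabric (80) meeting brighter trim (160).
edge = [80.0] * 5 + [160.0] * 5
mild = unsharp_mask(edge, amount=0.5)
heavy = unsharp_mask(edge, amount=2.0)

print("original:", [round(v) for v in edge])
print("mild:    ", [round(v) for v in mild])
print("heavy:   ", [round(v) for v in heavy])
```

The flat areas come out untouched, but the pixel on the dark side of the edge gets pushed darker than anything in the original and the pixel on the bright side gets pushed brighter. Crank `amount` up and that dip turns into a dark outline hugging the trim, which is the halo.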

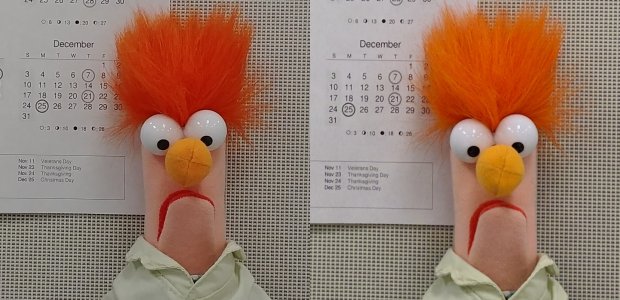

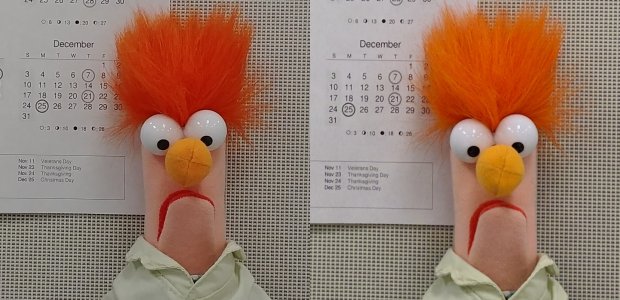

OK... Next example. My trusty photo test rig, with my boy Beak helping me out. The left is the stock LG camera (HDR was not triggered; I forced HDR on a second pic but there was zero difference in output), 1/40s @ ISO 85. The right is the Google Camera (HDR+ is enabled, as it will be for any static image in auto mode), 1/120s @ ISO 376.

With regard to the brightness (which I did not alter), one would think that the LG camera, exposed three times longer, should be brighter. But processing makes all the difference... The Google Camera rebuilds the image from information in the set of frames it captures, so it can increase brightness without artificial processing.
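As a quick sanity check on the numbers quoted above, here is the back-of-the-envelope arithmetic in Python. It assumes both apps used the same lens and aperture, and that the ISO values the two apps report are directly comparable, which may not be strictly true. The helper name is mine.

```python
import math

def exposure_gain_product(shutter_seconds, iso):
    """Shutter time multiplied by ISO: a rough proxy for how bright a frame
    comes out when aperture and scene lighting are the same."""
    return shutter_seconds * iso

lg = exposure_gain_product(1 / 40, 85)         # stock LG app
google = exposure_gain_product(1 / 120, 376)   # Google Camera port

ratio = google / lg
stops = math.log2(ratio)
print(f"Google vs LG: {ratio:.2f}x, {stops:+.2f} stops")
```

That works out to about 1.47x, or a bit over half a stop, in the Google Camera's favour. So on paper the higher ISO more than makes up for the shutter being three times shorter, and some of the extra brightness is down to the capture settings before any processing comes into it.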

OK... Now, objectively, the LG image on the left is sharper with less noise. But that comes at a cost (I think). First, you can see substantial halos on the text that create weird highlighting. Second, it could not distinguish between noise and the texture of the paper, so it chose poorly and removed the texture. And a lot of detail in the hair is lost on the left. As for color, while hard to judge since my monitor is not calibrated, the Google image is pretty spot on.
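To show why a simple noise filter eats paper texture, here is another small Python sketch. It uses a naive local average as the noise reduction, which is surely cruder than what LG ships, and the paper texture is a made-up alternating pattern. The point is only that a filter looking at local variation cannot tell real fine detail from noise.

```python
def smooth(row, radius=2):
    """Naive noise reduction: replace each pixel with its local average."""
    last = len(row) - 1
    out = []
    for i in range(len(row)):
        window = [row[min(max(j, 0), last)] for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out

def contrast(row):
    """Peak-to-peak variation, a crude stand-in for visible texture."""
    return max(row) - min(row)

# Hypothetical paper grain: real detail, no noise at all, +/-4 around mid grey.
paper = [128.0 + (4.0 if i % 2 == 0 else -4.0) for i in range(40)]
cleaned = smooth(paper)

# Ignore the first and last few pixels, where the window runs off the row.
print("texture before:", contrast(paper[2:-2]))
print("texture after: ", round(contrast(cleaned[2:-2]), 2))
```

There is no noise in this input, only texture, and the filter still flattens most of it. Stacking frames gets around this because real texture is the same in every frame while noise is not.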

I have more examples, which I might throw in subsequent replies here if anyone cares to look or discuss. But in conclusion, the one thing I took away from this is that LG is doing a really crap job in post processing. Granted, short of getting Google to let them use HDR+, they aren't going to be able to replicate it fully... but it's clear that the actual hardware, while still limited, is capable of far better quality pictures than what we currently have. Keep in mind that the Google Camera app has been optimized for a particular set of hardware (the Nexus and Pixel lines), so there has been zero fine tuning for the G6; the dev only tweaked it so it would run...
